Fujifilm tried it with the X-Pro1 at the beginning of 2012 and carried on with the X-E1 in autumn 2012 and the X20 in early 2013. Nikon's D800E had a go, too. Pentax did it with its K-5 IIs in 2012 and more recently with its K-3. The Olympus OM-D E-M1 went without, and so did the Ricoh GR, Sony's RX1R, and the newly announced Sony A7R and Nikon D5300. What sort of fire have these manufacturers been playing with? They have all omitted the optical low-pass filter, or anti-aliasing filter, from their cameras.

But what is an optical low-pass filter, why did we use to need one, and why can our cameras suddenly cope without it?

Low-pass filters

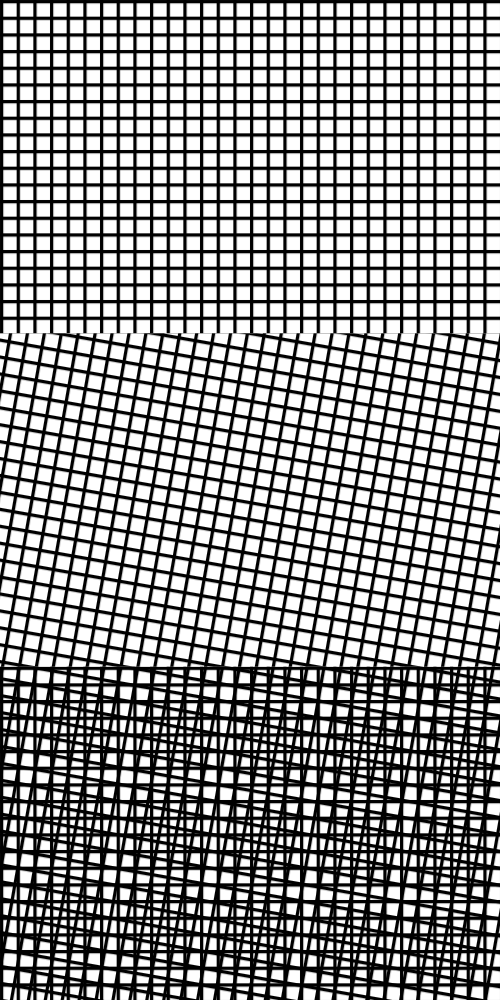

A low-pass filter comprises several layers of optical quartz that have been cemented together and placed in front of a camera's sensor. It was put there to help prevent the appearance of moiré in images: that odd effect where anything with a close pattern, for example a tie or denim, appears to swirl and fuzz, almost like the screen of an untuned television set or an animated GIF. Moiré can be hugely distracting.

Moiré

If you're familiar with the concept of temporal aliasing in video, where spinning wheels sometimes look as if they're going backwards, moiré isn't too far removed from that.

The pixels in a camera's sensor are arranged, somewhat logically, in a grid. If you were to photograph a subject that also has a close-knit, grid-like pattern, there's a significant chance that the pixel grid and the pattern grid won't align perfectly, leading to a jumpy, swirly clash of lines of pixels and lines of pattern. Imagine one patterned transparency placed over another, but with the patterns misaligned. This clash is called aliasing.
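The clash can be illustrated in one dimension. The sketch below is only an illustration, not how any camera processes an image: it assumes one sample per pixel and a pure cosine stripe pattern, and the function names are hypothetical. It shows that stripes finer than the pixel grid can resolve produce exactly the same pixel values as much coarser stripes, which is why a false, broad pattern appears.

```python
import math

def apparent_frequency(pattern_freq, sample_rate=1.0):
    """Frequency (cycles per pixel) that a sampled stripe pattern appears to have.

    Assumes ideal point sampling at sample_rate samples per pixel.
    """
    nearest_multiple = round(pattern_freq / sample_rate) * sample_rate
    return abs(pattern_freq - nearest_multiple)

def sample_stripes(pattern_freq, n_pixels):
    """Brightness of a cosine stripe pattern read at the centre of each pixel."""
    return [math.cos(2 * math.pi * pattern_freq * i) for i in range(n_pixels)]

# Stripes at 0.9 cycles per pixel are too fine for the grid...
fine = sample_stripes(0.9, 20)
# ...and land on the same pixel values as stripes at 0.1 cycles per pixel.
coarse = sample_stripes(0.1, 20)

indistinguishable = all(
    math.isclose(a, b, abs_tol=1e-9) for a, b in zip(fine, coarse)
)
print(apparent_frequency(0.9), indistinguishable)
```

Once the pattern has been sampled, the sensor has no way of telling the fine stripes from the coarse ones, so the false pattern is baked into the image.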

To prevent this from happening, camera manufacturers placed optical low-pass filters (or anti-aliasing filters) in front of their sensors. These filters worked by softening and blurring the image a touch, reducing the effect of the moiré. If you shot in JPEG you might not have noticed this added softening because the camera would compensate for it, but anyone who favoured Raw would have noticed the need to sharpen their images. The filter also ate into the detail that the sensor could record.
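The trade-off described above can be sketched numerically. This is a simplified model under stated assumptions: the blur is a plain box average one pixel wide, which is not the actual optical design of any manufacturer's filter, and the function names are hypothetical. It compares how much contrast a too-fine stripe pattern (the source of moiré) and a coarse, genuine pattern keep once the blur is applied before sampling.

```python
import math

def blurred_sample(pattern_freq, pixel_index, blur_width, steps=200):
    """Average a cosine stripe pattern over blur_width pixels centred on one pixel.

    blur_width = 0 means no filter: the pattern is read at the pixel centre.
    """
    if blur_width == 0:
        return math.cos(2 * math.pi * pattern_freq * pixel_index)
    total = 0.0
    for k in range(steps):
        # Midpoint of the k-th slice of the blur window
        x = pixel_index - blur_width / 2 + blur_width * (k + 0.5) / steps
        total += math.cos(2 * math.pi * pattern_freq * x)
    return total / steps

def contrast(pattern_freq, blur_width, n_pixels=40):
    """Half the peak-to-peak brightness swing across a row of pixels."""
    values = [blurred_sample(pattern_freq, i, blur_width) for i in range(n_pixels)]
    return (max(values) - min(values)) / 2

for freq in (0.9, 0.1):  # cycles per pixel: too fine for the grid, then coarse
    print(freq, round(contrast(freq, 0), 3), round(contrast(freq, 1.0), 3))
```

In this model the false pattern from the fine stripes loses most of its contrast, while the coarse pattern loses only a little. That small loss is the softening that Raw shooters had to sharpen back.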

What's changed, then? Why can we now do without these filters?

To a degree, increased pixel density has helped to create a situation where moiré doesn't happen anymore, or at least happens less frequently. The more pixels there are on the sensor, the finer the grid they form, and the finer a subject's patterning has to be before it clashes with that grid.
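As a rough illustration of why density matters, a grid can only record patterns up to one line pair per two pixels. The sketch below assumes a 36 mm wide sensor and two illustrative pixel counts, not the specifications of any camera mentioned here, and the function names are hypothetical.

```python
def finest_resolvable_lp_per_mm(sensor_width_mm, pixels_across):
    """Finest pattern the grid can record, in line pairs per mm on the sensor.

    One line pair needs at least two pixels: one for the dark line, one for the light.
    """
    pixel_pitch_mm = sensor_width_mm / pixels_across
    return 1.0 / (2.0 * pixel_pitch_mm)

def risks_moire(pattern_lp_per_mm, sensor_width_mm, pixels_across):
    """True if the projected pattern is finer than the grid can record."""
    return pattern_lp_per_mm > finest_resolvable_lp_per_mm(sensor_width_mm, pixels_across)

# A fabric weave projected onto the sensor at 80 line pairs per mm (illustrative value)
for pixels_across in (4000, 8000):
    limit = finest_resolvable_lp_per_mm(36.0, pixels_across)
    print(pixels_across, round(limit, 1), risks_moire(80.0, 36.0, pixels_across))
```

Doubling the pixel count across the same sensor width doubles the limit, so a weave that clashed with the coarser grid falls safely within the finer one. In practice the lens also has to resolve that much detail for the clash to occur at all.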

Moiré appears less often, and editing software has become more capable of dealing with it when it does. Together, these make the filters less desirable, especially when they eat into an image's resolution and sharpness.

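Finally, manufacturers such as Fujifilm are moving away from sensors with a rigidly repeating layout towards a less regular arrangement, closer to the random distribution of grain you'd find on film. In Fujifilm's X-Trans sensors it is the colour filter pattern over the pixels, rather than the position of the pixels themselves, that is less regular. This reduces the propensity for grids and lines to clash.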

Better without the low-pass filter because...

... it should improve colour rendition, sharpness, and detail in your photos.

If all they did was remove the filter, why were the Nikon D800E, Pentax K-5 IIs, and Sony's RX1R and A7R more expensive than their filtered counterparts?

It wasn't quite as simple as removing the filter. The filters needed to be replaced because they performed other functions, too, for example acting as infrared filters. New technology isn't always cheap. Sony has kept the 'one with, one without' option for its new full-frame mirrorless cameras, the A7 and A7R, but Nikon's D5300 is low-pass filter-free and so is the Ricoh Pentax K-3. It seems this is the way of things to come.